Consider a collection of random variables described by a joint probability distribution. Often, in any field with probability or statistics, one faces the task of simulating these random variables, which typically depend on each other in some fashion.

A now standard way for simulating or sampling such random variables is to use the Metropolis-Hastings algorithm, undoubtedly the cornerstone of Markov chain Monte Carlo methods. This method creates a discrete-time Markov chain that has a stationary or invariant distribution being the aforementioned distribution.

The algorithm was born out of a 1953 paper by Nicholas Metropolis, Arianna W. Rosenbluth, Marshall Rosenbluth, Augusta H. Teller, and Edward Teller (two husband-wife pairs), who looked at a special case, and a 1970 paper by W.K. Hastings, who generalized the method. It is typically called the Metropolis-Hastings or the Metropolis algorithm. And some have called it the M(RT)2 H algorithm.

(The history is a bit complicated, but perhaps we should drop the name Metropolis. The late Arianna (née Wright) Rosenbluth did most of the work. She was also a dab hand at fencing.)

Although the algorithm’s initial adoption and use was slow, taking decades partly due to slower computers, the Metropolis-Hastings algorithm is now a widely used method for simulating collections of random variables. This in turn gives fast ways for exploring, integrating, and optimizing otherwise unwieldy mathematical functions, such as those found in Bayesian statistics, machine learning, statistical physics, and combinatorial optimization. The algorithm serves as the foundation for other random simulation methods, such as the Gibbs sampler, hence it’s been called the workhorse of Marko chain Monte Carlo methods.

There are many books, articles, lecture notes, and websites describing the Metropolis-Hastings algorithm; see the Further reading section below. But I’ll detail the core ideas here. This post is designed to be somewhat self-contained, but it arose from a series of posts, starting with this one and ending with this particularly relevant one.

Constructing a Markov process

Take a collection of \(n\) random variables \(X_1,\dots,X_n\) with a (joint) probability distribution \(\pi(x)=\pi(x_1,\dots,x_n)\). This distribution will either be a (joint) probability mass function or (joint) probability density for discrete or continuous random variables, respectively.

We wish to construct a Markov chain on an abstract mathematical space \(\mathbb{X}\). We assume we can write a point \(x\in \mathbb{X}\) as \(x=(x_1,\dots,x_n)\). More specifically, the space \(\mathbb{X}\) is a Cartesian product of spaces \(\mathbb{X}_1,\dots,\mathbb{X}_n\) on which the variables are defined.

Which mathematical space is \(\mathbb{X}\)? That will, of course, depend on the random variables you’re trying to simulate. But it’s usually the lattice \(\mathbb{Z}^n\), Euclidean space \(\mathbb{R}^n\), or a subset of one of these two spaces.

For this post, we’ll assume that the space \(\mathbb{X}\) is discrete, which makes the things simpler. But the mathematics is similar for continuous spaces, with small changes such as replacing probabilities and sums with probability densities and integrals, respectively. We’ll further assume the space is finite, so we can use matrices to describe the Markov transition kernels. But everything covered here will work on infinite spaces such as \(\mathbb{R}^n\), which is the most common space used in practice.

Again, our overall aim is to construct a Markov chain with a stationary \(\pi\) being the same as the distribution that we want to sample.

Jumper process

There’s a random jumper that wants to jump around the space \(\mathbb{X}\). The jumper randomly jumps from one point in this mathematical space \(x\in \mathbb{X}\) to another point \(y\in \mathbb{X}\) according to the probability \(J(x,y)\). If the the state space \(\mathbb{X}\) is finite, then \(J\) becomes a matrix. The matrix row \(J(x,\cdot)\) is a probability mass function for each \(x\in \mathbb{X}\), so it sums to one. By definition, this random jumping forms a Markov chain.

The only thing we ask is that, for our jumper, every point \(x\) in \(\mathbb{X}\) where \(\pi(x)>0\) is reachable with positive probability in a single step. This implies the easy-to-achieve condition \(J(x,y)>0\) where \(\pi(x)>0\) for all points \(x,y\in\mathbb{X}\).

Now we have a Markovian jumper on the space \(\mathbb{X}\). But this turns out to be too much jumping for our jumper. Furthermore, the jumper is jumping more in certain directions than others. Occasionally the jumper wants to stay put (and have a rest) with the aim of balancing the jump directions.

The jumper still wants to jump sometimes from a point \(x\in \mathbb{X}\) to another point \(y\in \mathbb{X}\) based on \(J(x,y)\). But now at each time step, after choosing the jump direction but before jumping, the jumper flips a biased coin whose success probability \(\alpha(x,y)\) depends on the current position \(x\in \mathbb{X}\) and the (potential) next position \(y\in \mathbb{X}\). For the coin, the acceptance probability, which allows (or not) the jumper to move from \(x\) to \(y\), is given by

$$\alpha(x,y)=\min[1,\frac{\pi(y)}{\pi(x)}\frac{J(y,x)}{J(x,y)} ]\,,\quad x, y\in \mathbb{X}\,.$$

The function \(\alpha(x,y)\) is clearly never negative. The minimum in the above expression ensures that \(\alpha(x,y)\) is a valid probability.

Metropolis-Hastings ratio

The ratio in the expression for \(\alpha(x,y)\) is sometimes called the Metropolis-Hastings ratio, which we’ll soon see is designed specifically to balance the jump directions. The ratio means that a constant factor in the target distribution \(\pi(x)\) will vanish due to cancellation.

More specifically, if we can write the target distribution as \(\pi(x)=f(x)/C\), where \(C>0\) is some constant and \(f(x)\) is a non-negative function, then the ratio term \(\pi(y)/\pi(x)=f(y)/f(x)\). This reasoning also applies to a constant factor in the transition kernel \(M\).

The constant factor being irrelevant in the target distribution \(\pi(x)\) is very useful. It is particularly important for posterior distributions in Bayesian statistics and the Gibbs distributions in statistical physics, as these distributions typically have difficult-to-calculate constants.

Metropolis-Hastings process

The pairing of the original jumper Markov chain with the coin flipping creates a new Markov chain, which we call the Metropolis-Hastings process. What’s remarkable is its stationary distribution will be the target distribution \(\pi(x)\).

Transition kernel (matrix)

For the Metropolis-Hastings process, we can readily reason the structure of the transition kernel (matrix) \(M\) that describes this Markov chain. First we’ll look at the off-diagonal entries of \(M\).

Jumping from \(x\) to \(y\neq x\)

To jump from point \(x\) and to another point \(y\neq x\), the probability is simply the previous probability \(J(x,y)\) multiplied by the probability of that proposed jump being accepted, which is \(\alpha(x,y)\), giving

$$ M(x,y) = \alpha(x,y) J(x,y), \quad x\neq y\,.$$

Now we examine the diagonal entries of \(M\).

Jumping from \(x\) to \(x\)

There are two different ways for the jumper to remain at point \(x\). The first way is that the jumper simply jumps from \(x\) to \(x\), which happens with probability \(J(x,x)\). This proposed jump, so to speak, is accepted with probability one because \(\alpha(x,x)=1\). Consequently, we can write this probability as \(\alpha(x,x)J(x,x)\) or \(J(x,x)\).

The second way consists of all the possible jumps from \(x\) to \(z\), but then for each of those proposed jumps to be rejected, which happens (for each jump) with probability \([1-\alpha(x,z)]\). (I am using here the dummy or bound variable \(z\) instead of \(y\) for clarity.) Adding up the probabilities of these events gives the probability of the second way being the sum \(\sum_{z\in \mathbb{X}}[1-\alpha(x,z)] J(x,z) \,.\)

Consequently, for a single time step, the probability that the jumper starts at point \(x\) and remains at \(x\) is

$$ M(x,x) = \alpha(x,x)J(x,x)+\sum_{z\in \mathbb{X}}[1-\alpha(x,z)] J(x,z) \,.$$

The transition matrix \(M\) should be stochastic, so the rows sum to one, which we see is the case

$$ \sum_{y\in\mathbb{X}}M(x,y)=1\,.$$

Of course, we could have derived the diagonal entry \(M(x,x)\) immediately by starting with the above sum, but that approach lacks intuition into what’s happening.

Expression for the transition kernel \(M\)

$$M(x,y) = \begin{cases}

\alpha(x,y) J(x,y) & \text{if }

\begin{aligned}[t]

x&\neq y

\end{aligned}\\

\alpha(x,x)J(x,x)+\sum_{z\in \mathbb{X}}[1-\alpha(x,z)] J(x,z) & \text{if } x=y

\end{cases}$$

Often the above expression is written as a single line by placing an indicator function or similar in front of the sum for the diagonal entries. (In the continuous case, where the sum is replaced with an integral, a Dirac delta distribution is often used instead.)

Reversibility

A Markov process on \(\mathbb{X}\) with kernel (matrix) \(K\) is (time) reversible with respect to the distribution \(\mu\) if the following holds

$$ \mu(x)K(x,y) = \mu (y) K(y,x)\quad x,y\in\mathbb{X}\,.$$

This reversibility condition is also called the detailed balance equation. If this condition is met, then the Markov process will have a stationary distribution \(\mu\). By summing over \(x\), we can verify this because we obtain

$$ \sum_{x\in\mathbb{X}}\mu(x)K(x,y) =\mu(y)\sum_{x\in\mathbb{X}} K(y,x)=\mu(y)\,.$$

This is just the balance equation, often written as \(\mu=K\mu\), which says that the transition kernel \(K\) has a stationary distribution \(\mu \).

(Strictly speaking, we don’t necessarily need reversibility, as long as the Markov chain has a stationary distribution. Reversibility just makes the algebra easier.)

The Metropolis-Hastings process is reversible

We can show that the Metropolis-Hastings process with the transition kernel \(M\) satisfies the reversibility condition. The proof essentially just requires the swapping of rows and columns. Clearly then we only need to look at the off-diagonal entries, so we assume \(x\neq y\).

We further assume that \(\pi(y)J(y,x)\geq \pi(x) J(x,y)\). Then \(\alpha(x,y)=1\), so \(M(x,y)=J(x,y)\). Now we use this last fact in the last line of algebra that follow:

$$\begin{aligned}\pi(y) M(y,x)&=\pi(y)J(y,x) \alpha(y,x)\\ &= \pi(y)J(y,x) \frac{\pi(x)}{\pi(y)}\frac{J(x,y)}{J(y,x)}\\ &= \pi(x)J(x,y)\\&= \pi(x)M(x,y)\,,\end{aligned}$$

which shows that the reversibility condition holds with the last assumption.

But of course we can reverse that assumption, due to symmetry, so \(\pi(y)J(y,x)\leq \pi(x) J(x,y)\), then \(\alpha(x,y)=[\pi(y)J(y,x)]/[\pi(x) J(x,y)]\) and \(\alpha(y,x)=1\), implying this way also works. Then the reversibility condition holds for all cases.

We could have shown this more quickly by observing that

$$\begin{aligned}\pi(y) M(y,x)&=\pi(y)J(y,x)\alpha(y,x)\\ &= \pi(y)J(y,x) \min[1, \frac{\pi(x)}{\pi(y)}\frac{J(x,y)}{J(y,x)}]\\ &= \min[\pi(y)J(y,x) , \pi(x)J(x,y)]\,,\end{aligned}$$

which is symmetric in \(x\) and \(y\).

In summary, the Metropolis-Hastings process is reversible and has the stationary distribution \(\pi(x)\).

Continuous case

As mentioned earlier, the continuous case is similar to the discrete-case with a few modifications. For example, assuming now \(\mathbb{X}\) is continuous, then the stationary distribution is described by a probability density \(\pi(x)\). The jumper process will be described by transition kernel (function) \(j(x,y)\), where \(j(x,\cdot)\) is now a probability density for each \(x\in \mathbb{X}\).

It follows that the acceptance probability is

$$\alpha(x,y)=\min[1,\frac{\pi(y)}{\pi(x)}\frac{j(y,x)}{j(x,y)} ]\,,\quad x, y\in \mathbb{X}\,.$$

The transition kernel (function) is

$$m(x,y) = \begin{cases}

\alpha(x,y) j(x,y) & \text{if }

\begin{aligned}[t]

x&\neq y

\end{aligned}\\

\alpha(x,x) j(x,x)+\int_{\mathbb{X}}[1-\alpha(x,z)]j(x,z) dz & \text{if } x=y

\end{cases}$$

For more details, there are derivations online such as this one, as well as the sources cited in the Further reading section below.

Libraries

Of course, before writing your own code, I would check out any pre-written functions in your favourite language that already implement the Metropolis-Hastings algorithm.

For example, in MATLAB there’s the function mhsample. Again I would be using this before writing my own code, unless it’s for illustration or educational purposes.

In Python there’s the library pymcmcstat. For those with a machine learning bent, there’s also a TensorFlow function MetropolisHastings.

In R, which is a language designed for statistics, implementations of any Markov chain Monte Carlo methods will be couched in the language and notation of (Bayesian) statistics. For a specific library, I can’t say I know which is the best, but you can check out the MCMCPack library. For R libraries, be wary of using the ones that were published and are (or were?) maintained by a single contributor.

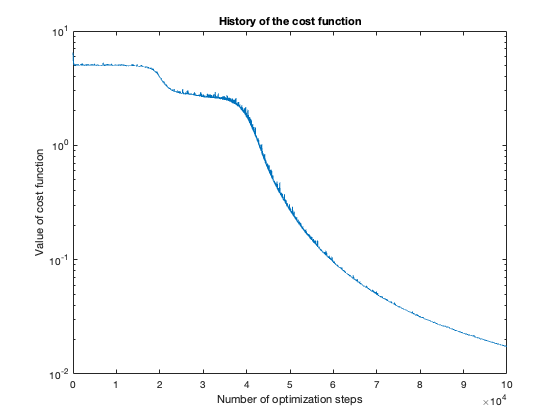

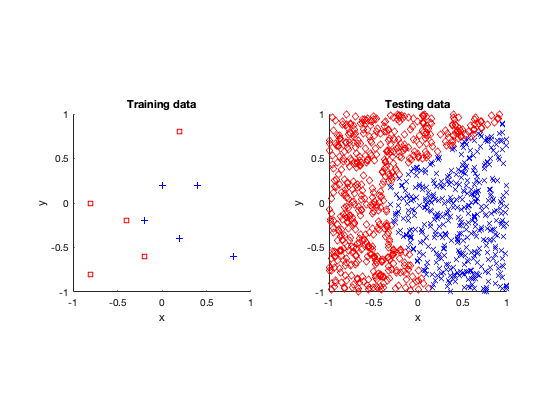

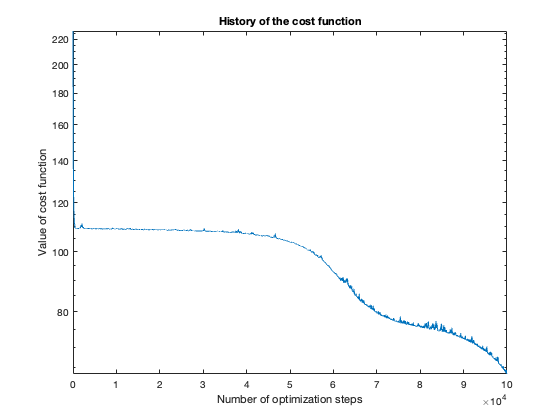

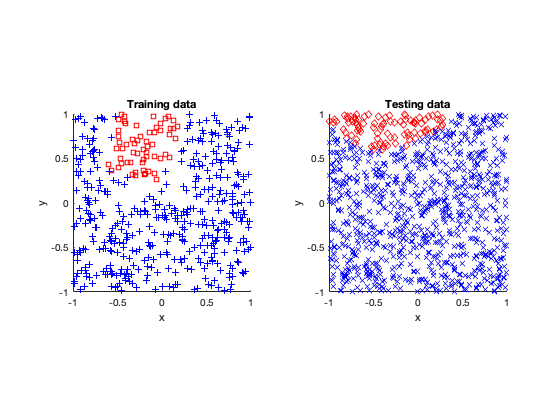

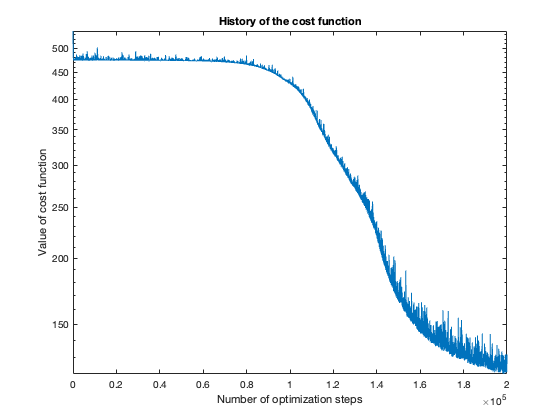

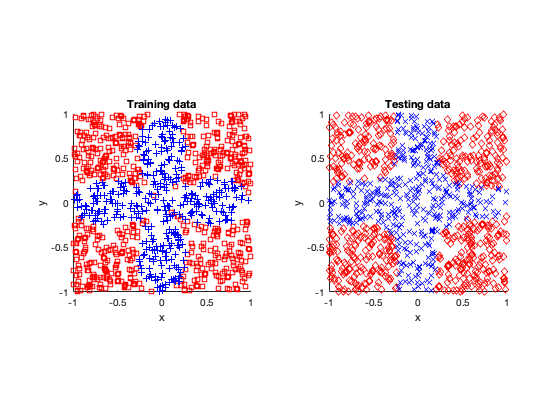

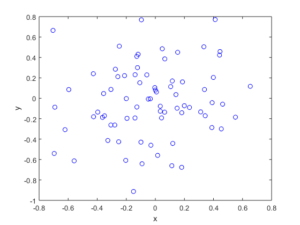

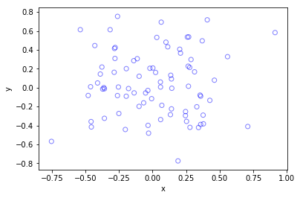

Code

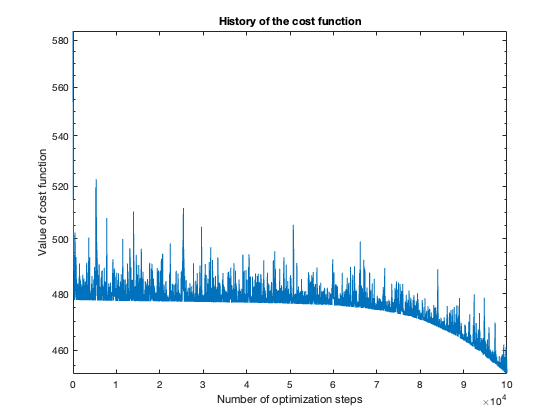

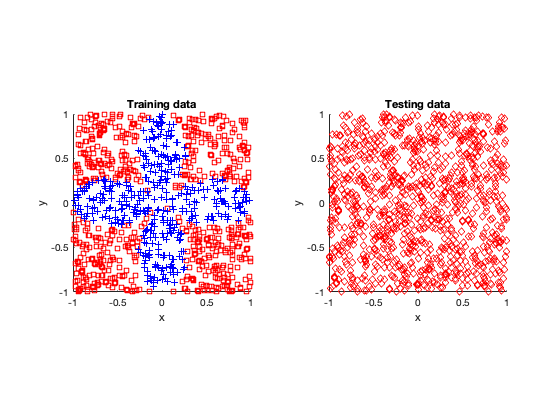

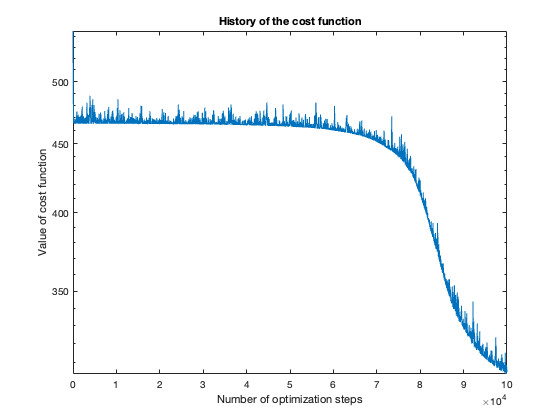

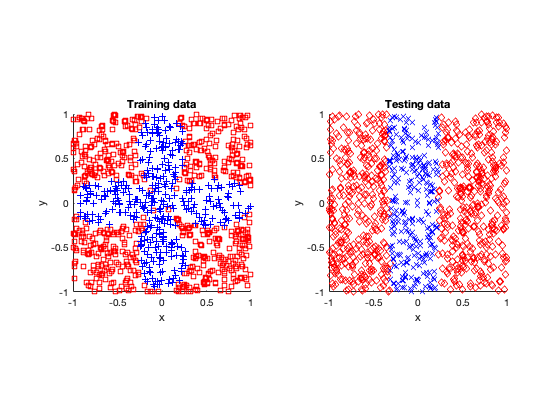

For a couple of simple examples in both one dimension and two dimensions, I’ve implemented the Metropolis-Hastings algorithm in the programming languages R, MATLAB, Python (NumPy) and Julia. The code can be found here.

Further reading

There are good articles on explaining the Metropolis-Hastings approach, as well as its history. On this topic, the articles are probably better resources than the books.

Articles

Historical

The two important papers behind the Metropolis-Hastings algorithm are:

- 1953 – Metropolis, Rosenbluth, Rosenbluth, Teller, Teller – Equation of state calculations by fast computing machines;

- 1970 – Hastings – Monte Carlo sampling methods using Markov chains and their applications.

Introductory

There are several tutorial and historical articles covering the Metropolis-Hastings algorithm. An earlier explanatory article is

- 1995 – Chib and Greenberg – Understanding the Metropolis-Hastings Algorithm.

I also recommend:

- 1994 – Tierney – Markov chains for exploring posterior distributions;

- 1998 – Diaconis and Saloff-Coste – What do we know about the Metrolpolis algorithm?;

- 2001 – Billera and Diaconis – A Geometric Interpretation of the Metropolis-Hastings Algorithm;

- 2015 – Minh and Minh – Understanding the Hastings Algorithm;

- 2016 – Robert – The Metropolis-Hastings algorithm.

The above article by Billera and Diaconis shows that the Metropolis-Hastings algorithm is actually the minimization (on the space of possible samplers) using an \(L^1\) norm. (Note that \(K(x,x)\) should be \(K(x,y)\) in equation (1.3), so it agrees with equation (2.1).)

The following article covers the Metropolis-Hastings algorithm in the context of machine learning (particularly Bayesian statistics):

- 2003 – Andrieu, de Freitas, Doucet, and Jordan – An Introduction to MCMC for Machine Learning.

For a surprising real world application, in the following article, Diaconis briefly recounts a story about a couple of graduate students using the Metropolis-Hastings algorithm to decipher a letter from a prison inmate who had used a simple substitution cipher:

- 2009 – Diaconis – The Markov chain Monte Carlo revolution.

For Markov chains on general state spaces, the following paper gives a survey of results (often with new proofs):

- 2004 – Roberts and Rosenthal – General state space Markov chains and MCMC algorithms.

History

The history of this algorithm and related methods are described in the following articles:

- 2003 – David – A History of the Metropolis Hastings Algorithm;

- 2005 – Gubematis – Marshall Rosenbluth and the Metropolis algorithm;

- 2011 – Robert and Casella – A Short History of Markov Chain Monte Carlo: Subjective Recollections from Incomplete Data.

Incidentally, the first author of the third paper, Christian P. Robert, posts regularly on Markov chain Monte Carlo methods, such as the Metropolis-Hastings algorithm, as well as many other topics.

Books

Modern books on stochastic simulations and Monte Carlo methods will often detail this method. For example, see the Handbook of Monte Carlo Methods (Section 6.1) by Kroese, Taimre and Botev. The book Stochastic Simulation: Algorithms and Analysis by Asmussen and Glynn also covers the method in Chapter XIII, Section 3. (For my remark on reversibility, see Remark 3.2 in Asmussen and Glynn.) There is also the book Monte Carlo Strategies in Scientific Computing by Liu; see Chapter 5.

Websites

There are many, many websites covering this topic. Searching around, the following link is probably my favourite, as it gives a detailed explanation on how the Metropolis-Hastings algorithm works and includes with Python code:

This post also covers it with Python code:

This site details the algorithm with R code:

Here’s a quick introduction with a one-dimensional example implemented in R: